Facebook’s News Feed Update Relies on Unreliable Signals

Like pretty much everyone else in journalism, I’ve been thinking a lot about Facebook’s big News Feed announcement.

In case you missed it, content posted by publishers and institutional players will now be downplayed in the News Feed in favor of stuff your friends and family share and comment on. The result will be fewer articles from newsrooms and (shudder) brands, and more stuff from actual people like your Aunt Susie. Fair enough. It’s named after a face book for a reason, right?

Facebook says they’re doing this to address the “well-being” of their users. Mark Zuckerberg uses the word multiple times in his post about the announcement. What they never mention is “fake news,” nor do they explain how these changes might stop bad actors from manipulating their network the way they did during the U.S. presidential election and Brexit (and likely elsewhere, too). That’s odd, because those are clearly the events that precipitated these changes. And there’s good reason for concern, because this update may do little to stop it all from happening again.

Under this new arrangement, “engagement” becomes the key factor in determining what users see. Publishers will still be able to post their stories, but it will take comments, shares, and “likes” from friends and family for those posts to rise to the top. In Facebook’s own words, the feed will favor “posts that inspire back-and-forth discussion in the comments and posts that you might want to share and react to.” That means Facebook will be basing their revamped feed on a process that responds to emotional reactions.

That’s a setup that talks straight to the lizard brain. Moreover, emotional reaction correlates only weakly with value. I’m talking about the good-for-people-and-society kind of value, not the good-for-Facebook’s-bottom-line kind. Josh Benton nails it in his post on Nieman Lab:

> [A]t a more practical level, it seems to encourage precisely the sort of news (and “news”) that drives an emotional response in its readers — the same path to audience that hyperpartisan Facebook pages have used for the past couple of years to distribute misinformation. Those pages will no doubt take a hit with this new Facebook policy, but their methods are getting a boost.

Content that “starts conversation” isn’t automatically good content. Just because live videos “get six times as many interactions as regular videos” doesn’t mean they’re any better for us. It just means we’re reacting to them more. Facebook needs to know why we’re reacting and incorporate more objective elements into their algorithms instead of relying on subjective, emotional ones. Otherwise they’re leaving the door wide open to still more social poison.

Building those sorts of objective filters is precisely where Facebook has struggled in the past. Efforts to halt “engagement bait” have focused on surface-level signals, such as posts that explicitly ask readers to “tag a friend” or “share if you agree,” and those signals have proved ineffective against more calculated campaigns from troll farms and the like.

And then there are the unintended consequences. For that I’ll cite a very recent personal example.

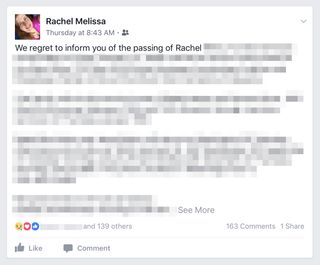

Last week a member of my wife’s extended family passed away. She had been active on Facebook, and when she died someone updated her page to let us know what had happened and when and where the funeral services would be. Because this post keeps getting “reactions” and comments, it keeps appearing at the top of my feed, helpfully reminding me of her death over and over again, days after the fact.

Keep in mind this is a company that knows how often I log in, whether I’ve seen this post, and, yes, whether I’ve “engaged” with it. Even with that information, they aren’t getting it right. Why? Because engagement alone is too brittle a determining factor, and it responds too easily to the wrong signals.

As for publishers, they’ll still be right in the thick of it, hunting for new ways to make their content popular under this latest set of conditions. It’s naive to think they’ll simply walk away. Even a reduced slice of billions of users is too rich a prize for them to ignore.

Institutional content will still get shared on Facebook. Virtuous publishers and ne’er-do-wells alike will still fight like hell for it to be their content that gets shared. For this to work, Facebook has to get better at telling the good from the bad and build tools that know the difference.